About me

I am a Research Scientist at Nokia Bell Labs, Cambridge, UK, specializing in multimodal data processing and machine learning. My research bridges physical modeling, signal processing, and intelligent computation, enabling systems that understand human physiology, environment, and interaction in real-world settings.

Previously, I was a Marie Curie Research Fellow at The University of Sheffield, UK, where I led an EU Horizon 2020 project on battery-free backscatter communications under the supervision of Professor Xiaoli Chu.

I received a Ph.D. in Communication and Information Systems from the University of Chinese Academy of Sciences (UCAS), China, in July 2019, supervised by Professor Yang Yang. I also earned an M.Eng. in Software Engineering from Beihang University in 2016 and a B.Eng. in Microelectronics from Anhui University in 2012.

I am always seeking new opportunities and collaborations that allow me to explore and expand the boundaries of multimodal technologies. I welcome partnerships that bridge research, innovation, and real-world impact.

Projects

Multimodal Sensing and Human-Centered Machine Learning

I design AI-enhanced wearable and earable systems that fuse multimodal data from diverse sensors, including photoplethysmography (PPG), inertial measurement units (IMUs), acoustic and temperature sensors, ultra-wideband (UWB), millimeter-wave (mmWave) radar, and virtual or augmented reality (VR/AR) interfaces, to interpret physiological and behavioral states. The OmniBuds platform, developed collaboratively within our team, became a major spin-off project in 2024 and established a global research ecosystem for multimodal earable intelligence. I also lead projects such as EarFusion, SPATIUM, and mmHvital, which advance embedded machine learning, signal-quality-aware fusion, and spatiotemporal context modeling for health perception and human–computer interaction.

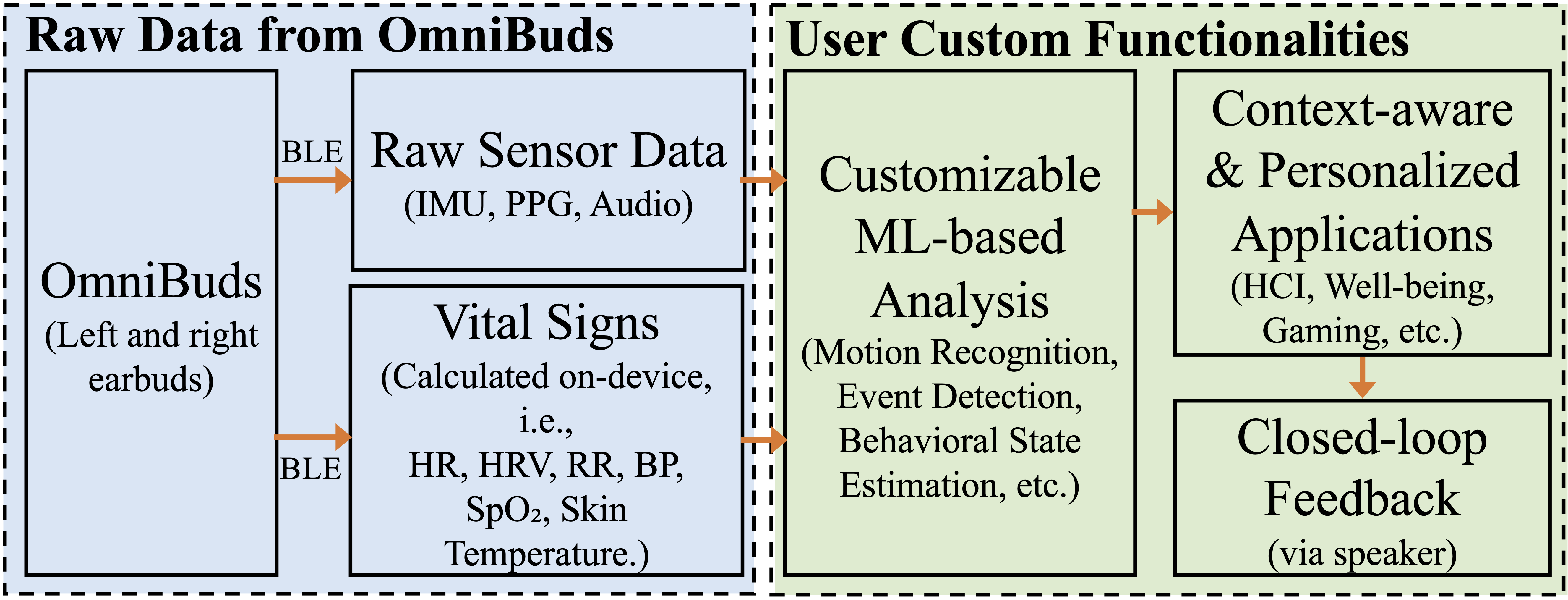

OmniBuds: A Sensory Earable Platform for Multimodal Bio-Sensing and On-Device Machine Learning [paper]

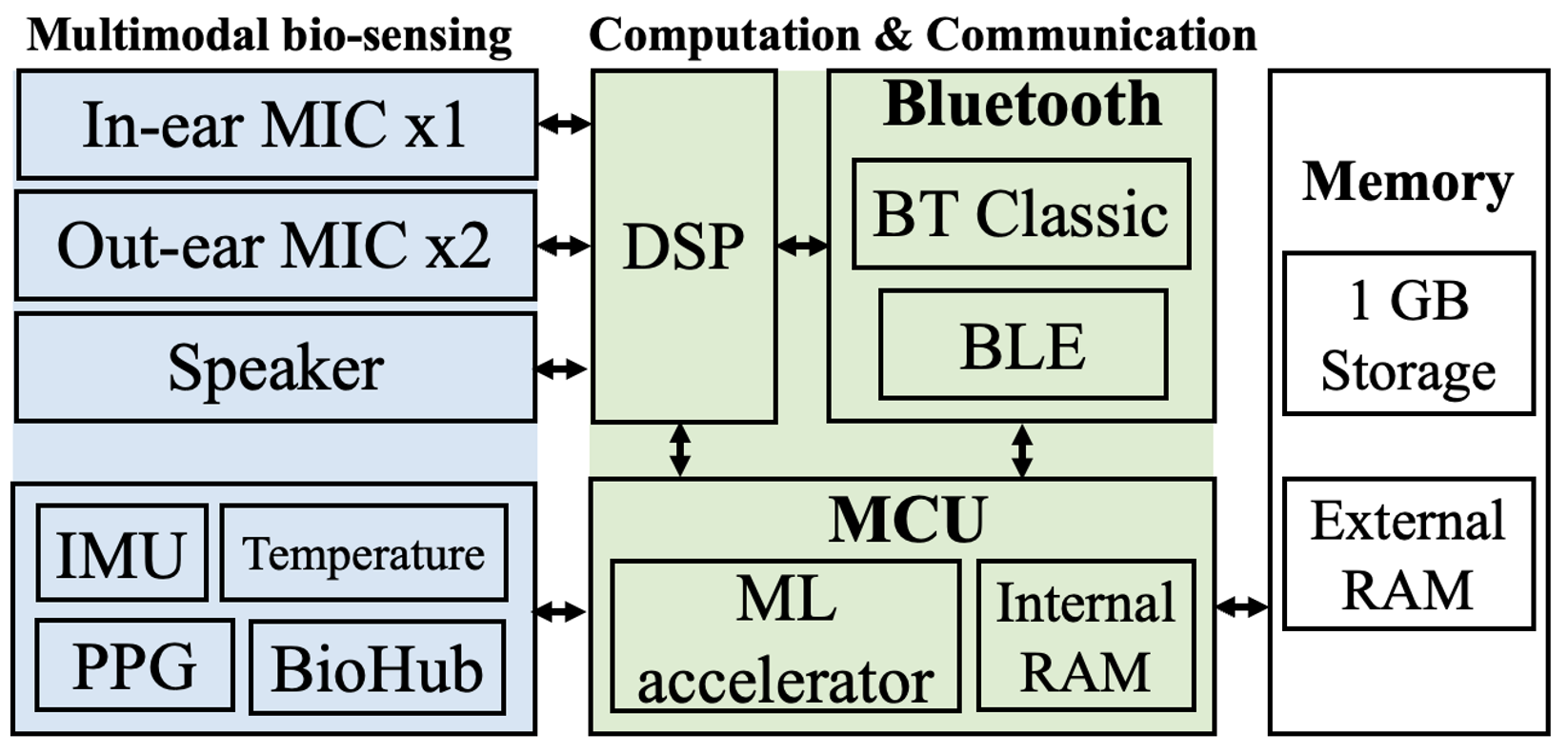

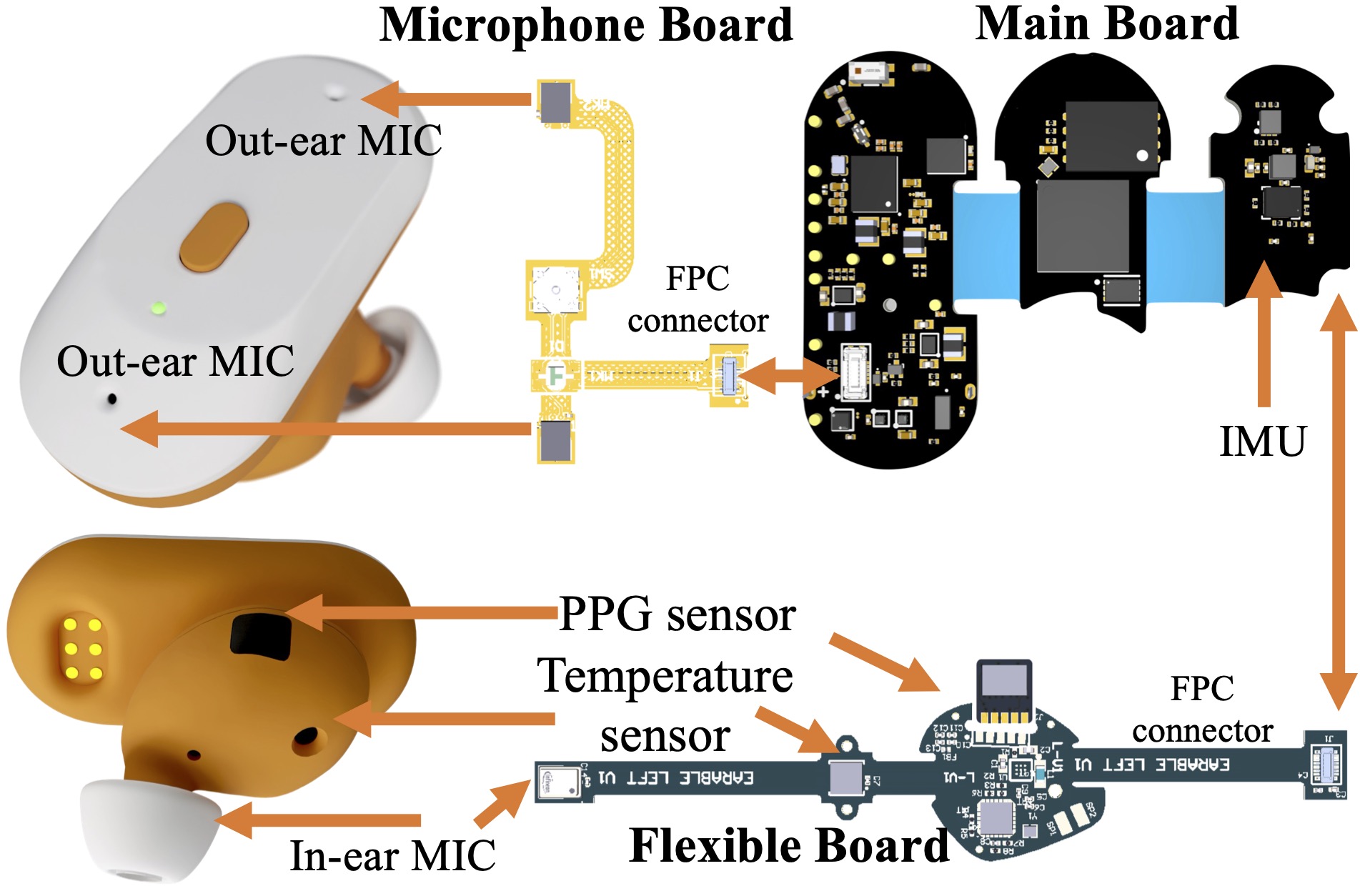

We proposed OmniBuds, an advanced AI-powered earbud and earable research platform that became a successful spin-off project in 2024. OmniBuds represents a major effort to bridge consumer-grade audio technology with scientific research, enabling multimodal bio-sensing and on-device machine learning within a compact, wearable form factor. The first wave of distribution has reached over 30 universities across more than 10 countries, establishing OmniBuds as a global open platform that empowers the research community to explore next-generation earable intelligence.

OmniBuds integrates a comprehensive suite of sensors: PPG for cardiovascular monitoring; 9-axis inertial measurement units (IMUs), each combining a 3-axis accelerometer, 3-axis gyroscope, and 3-axis magnetometer, for motion and spatial tracking; skin temperature sensors; and multiple microphones (one inward-facing and two outward-facing) for both in-ear physiological sensing and ambient acoustic capture.

Each earbud is equipped with an embedded machine learning accelerator that supports efficient, low-power computation for real-time multimodal data analysis. Built upon a True Wireless Stereo (TWS) design, OmniBuds also retains standard audio functionalities such as active noise cancellation (ANC), transparency mode, music playback, and phone calls, providing both research flexibility and everyday usability.

OmniBuds Web: https://www.omnibuds.tech/

The OmniBuds project has received broad media attention for its innovation in AI-powered earable sensing and on-device machine learning.

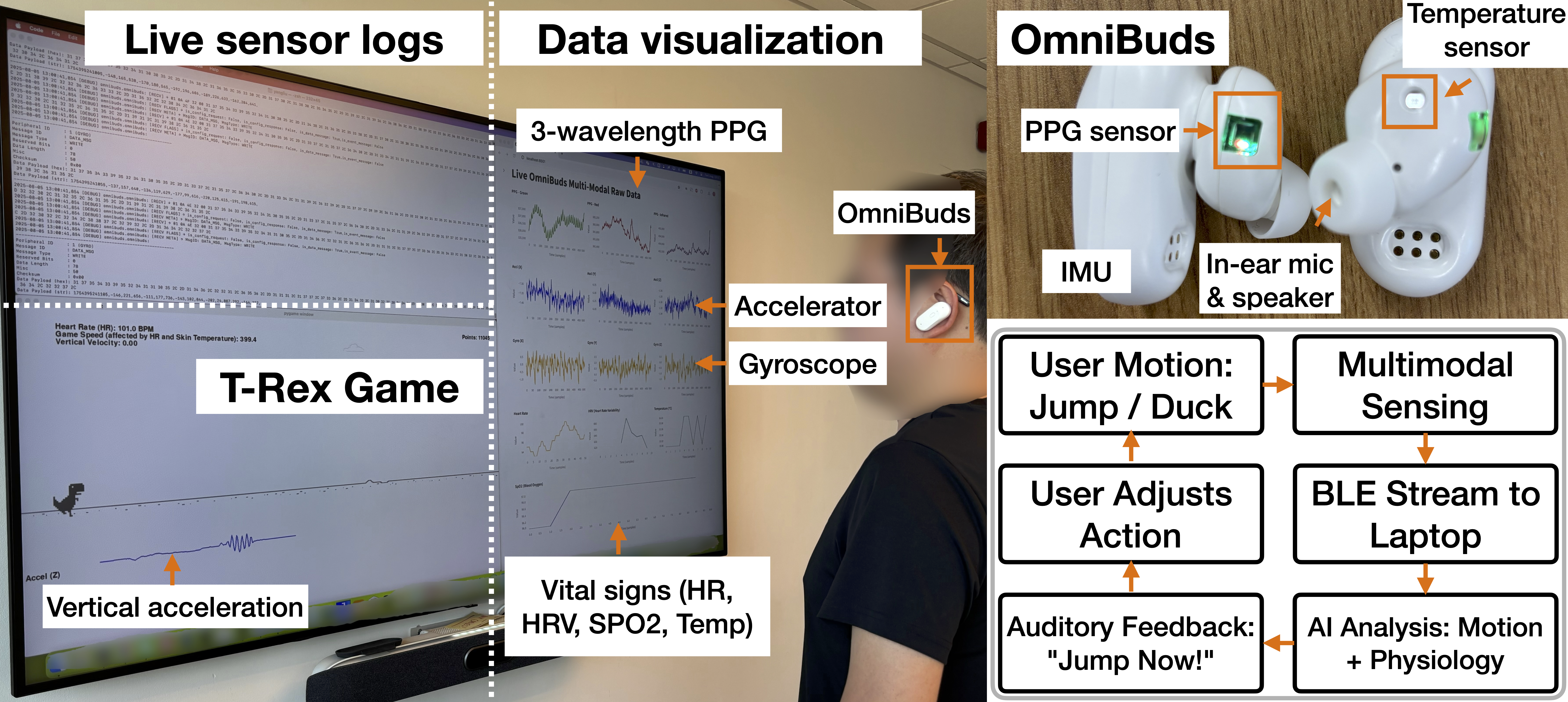

OmniBuds Demo: AI-Powered Multimodal Sensing for Human Enhancement [paper]

[Demonstrated at ACM MobiCom 2025, Hong Kong, China]

In this demo, OmniBuds act as an AI-enhanced human–machine interface to control the Chrome T-Rex game. Head and body motions detected through IMU data trigger jumping and ducking actions. A real-time visualization dashboard displays raw IMU and PPG waveforms, derived vital signs, and gameplay feedback. The AI assistant analyses user performance, including reaction time, movement quality, fatigue indicators, and heart rate, and provides adaptive auditory cues such as “Jump/Duck now!” through the earbuds.
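One simple way to turn IMU data into jump and duck triggers is a peak-threshold rule, sketched below. This is an illustrative assumption, not the demo's actual detector: the function name, the use of gravity-removed vertical acceleration, and the threshold values are all hypothetical.

```python
from typing import Optional, Sequence


def detect_action(
    vertical_accel_g: Sequence[float],
    jump_threshold_g: float = 0.6,   # hypothetical threshold
    duck_threshold_g: float = -0.6,  # hypothetical threshold
) -> Optional[str]:
    """Map a short window of gravity-removed vertical acceleration to an action.

    Assumed rule: a strong upward peak means "jump", a strong downward
    peak means "duck"; if both occur, the larger magnitude wins.
    Returns None when neither threshold is crossed.
    """
    if len(vertical_accel_g) == 0:
        return None
    peak_up = max(vertical_accel_g)
    peak_down = min(vertical_accel_g)
    if peak_up >= jump_threshold_g and peak_up >= -peak_down:
        return "jump"
    if peak_down <= duck_threshold_g:
        return "duck"
    return None


# Example windows (values in g, made up for illustration).
print(detect_action([0.0, 0.2, 0.9, 0.1]))
print(detect_action([0.0, -0.8, -0.1]))
print(detect_action([0.05, -0.05]))
```

A learned classifier over IMU windows would replace this rule in practice; the threshold version only shows the mapping from motion signal to game action.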

As shown in the right figure below, the system architecture integrates multimodal sensing, machine-learning-based analysis, and personalized feedback loops. The AI layer fuses physiological and motion data from OmniBuds to enable adaptive, context-aware applications such as gaming and human–computer interaction.

This demo aims to demonstrate how multimodal learning and physiological inference can enhance human performance and engagement in interactive tasks.

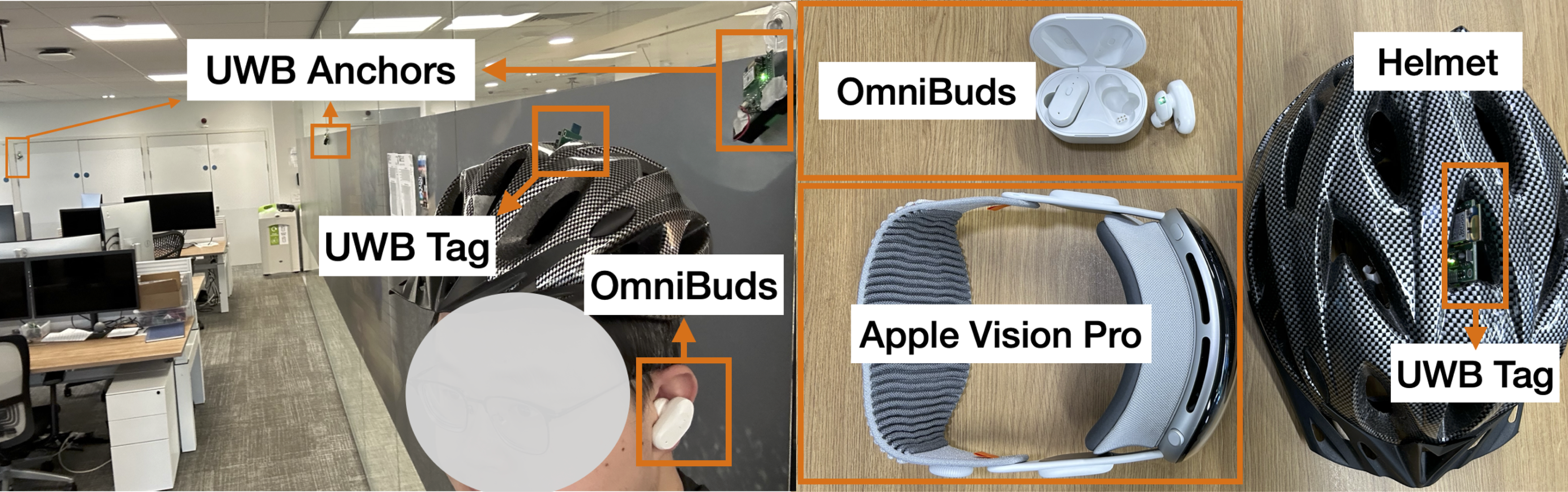

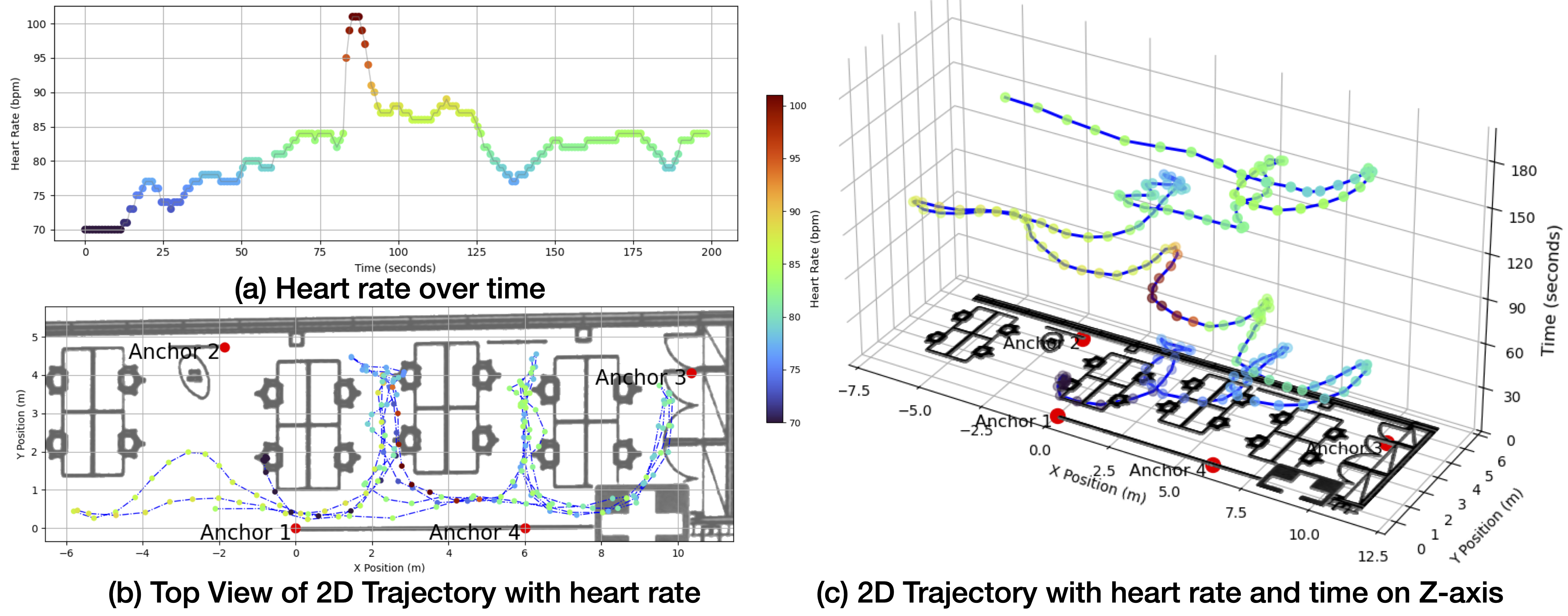

SPATIUM: A Framework for Multimodal, LLM-Enhanced, and Spatiotemporal Machine Learning in Human-Centered Health Applications [paper]

SPATIUM redefines digital health by fusing physiological sensing, spatial intelligence, and context-aware machine learning into one cohesive framework.

It bridges the gap between body and environment by capturing heart rate, heart rate variability (HRV), respiration, and temperature from OmniBuds while integrating ultra-wideband (UWB) indoor localization and environmental data such as light, sound, and temperature. The result is an immersive spatiotemporal health map rendered in 2D, 3D, and Apple Vision Pro environments, enabling intuitive visualization of how the body responds to its surroundings in real time.

Powered by large language models (LLMs), SPATIUM can interpret multimodal data, explain physiological changes within environmental context, and generate personalized insights and alerts in natural language.

A demo for the spatiotemporal visualization is shown in the video below.

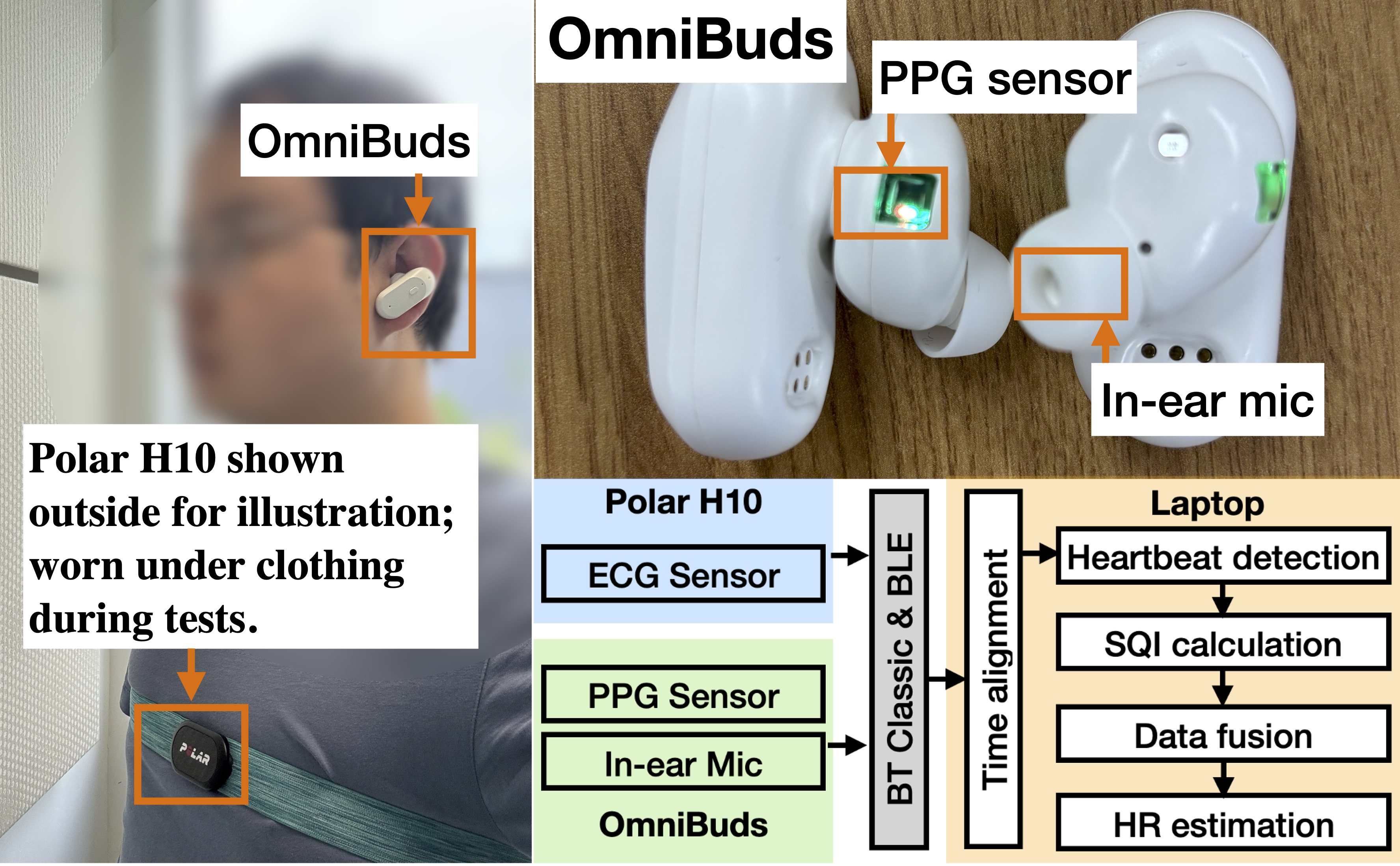

EarFusion: Quality-Aware Fusion of In-Ear Audio and Photoplethysmography for Heart Rate Monitoring [paper]

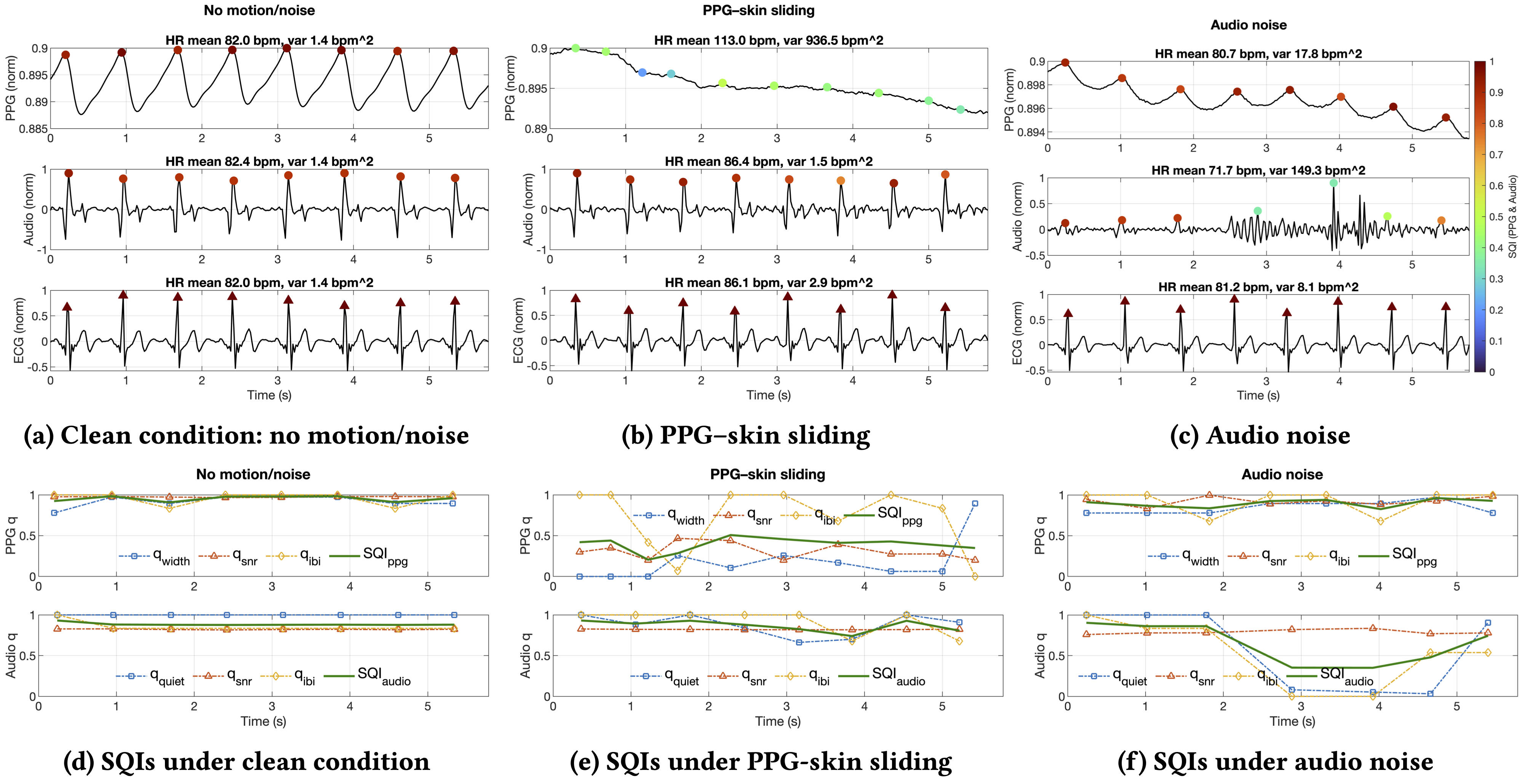

EarFusion explores how in-ear audio and PPG, two physiologically complementary modalities, can be dynamically fused for robust heart rate (HR) monitoring in real-world environments.

While PPG is widely used in wearables, it is easily corrupted by motion and skin–sensor variability. In contrast, in-ear audio sensing captures cardio-mechanical vibrations that are resilient to motion but sensitive to acoustic noise. EarFusion bridges these limitations through signal quality–aware fusion that adapts in real time to the reliability of each modality.

Built on the OmniBuds earable platform, EarFusion integrates an in-ear microphone, optical PPG sensor, and reference ECG (Polar H10). The system computes modality-specific fine-grained metrics and Signal Quality Indices (SQIs) that capture SNR, morphology, and rhythm consistency, and then uses them to fuse HR estimates according to each modality’s reliability. Experimental results across multiple participants and conditions show that EarFusion consistently outperforms single-modality baselines, achieving stable HR estimation under both motion and acoustic interference.
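The idea of reliability-based fusion can be illustrated with a minimal sketch. It assumes each modality produces an HR estimate and a scalar SQI in [0, 1], and combines them by SQI-weighted averaging after discarding low-quality modalities. The function name, the rejection threshold, and the weighting rule are illustrative assumptions, not the EarFusion implementation.

```python
from typing import Optional, Sequence


def fuse_heart_rate(
    estimates_bpm: Sequence[float],
    sqis: Sequence[float],
    min_sqi: float = 0.3,  # hypothetical rejection threshold
) -> Optional[float]:
    """Fuse per-modality HR estimates using SQI-weighted averaging.

    Modalities whose SQI falls below `min_sqi` are dropped; the rest are
    averaged with weights proportional to their SQI. Returns None when
    no modality is reliable enough.
    """
    if len(estimates_bpm) != len(sqis):
        raise ValueError("estimates_bpm and sqis must have the same length")

    kept = [(hr, q) for hr, q in zip(estimates_bpm, sqis) if q >= min_sqi]
    if not kept:
        return None

    total_weight = sum(q for _, q in kept)
    return sum(hr * q for hr, q in kept) / total_weight


# Example: PPG is corrupted by motion (low SQI), in-ear audio is clean,
# so only the audio estimate survives the threshold.
print(fuse_heart_rate(estimates_bpm=[96.0, 72.0], sqis=[0.1, 0.9]))
```

Returning None rather than a low-confidence number lets a caller hold the previous estimate when both modalities are unreliable.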

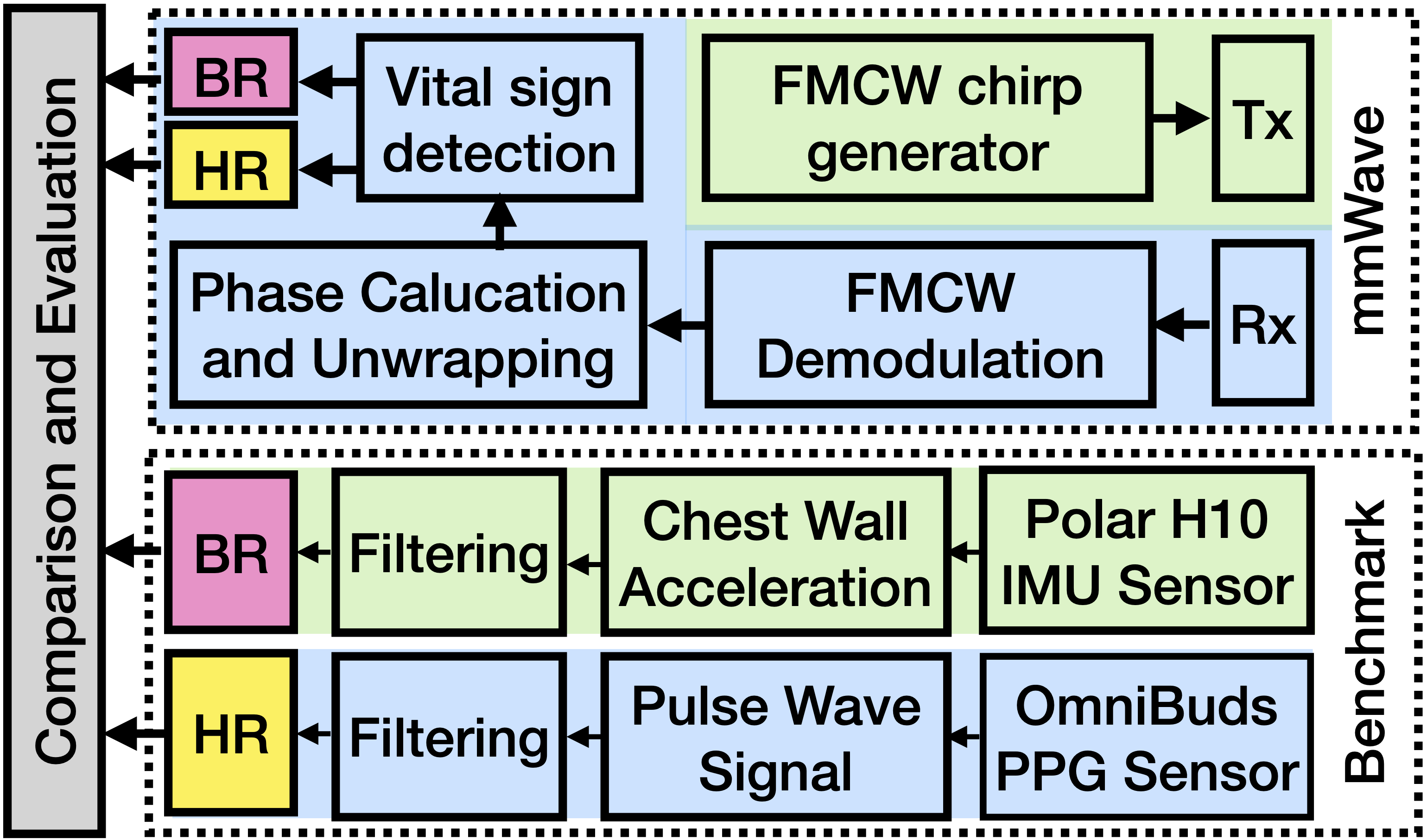

mmHvital: A Study on Head-Mounted mmWave Radar for Vital Sign Monitoring [paper]

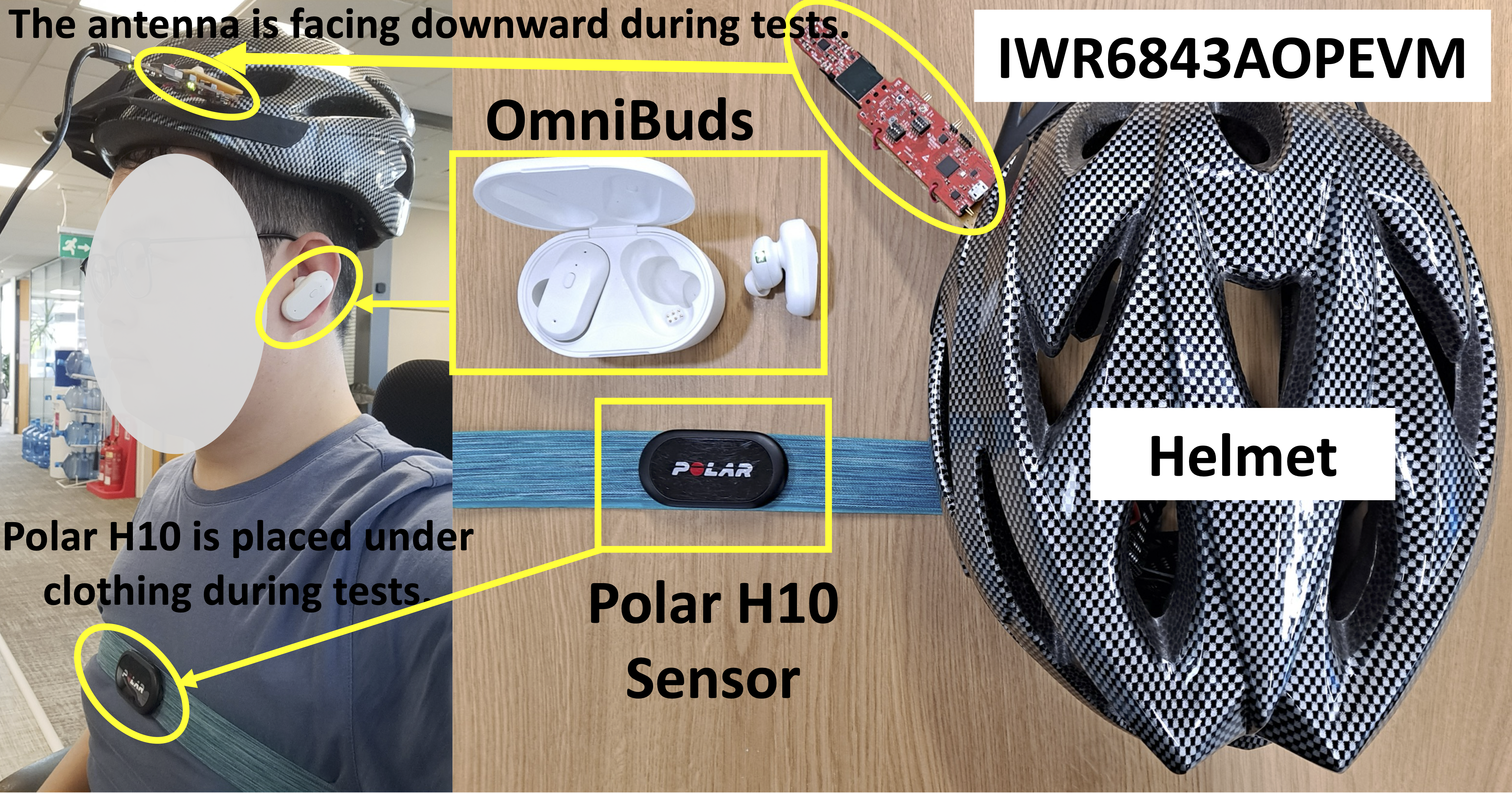

As wearable sensing evolves toward non-contact and mobility-friendly designs, mmHvital explores how head-mounted mmWave radar can monitor vital signs such as heart rate (HR) and respiration rate (RR) without chest-level placement. The motivation stems from the limitations of traditional radar setups, which require fixed, front-facing positioning and are therefore unsuitable for natural, mobile use in daily scenarios.

In this work, I designed and built the first head-mounted mmWave sensing prototype for vital sign monitoring, integrating a TI IWR6843AOP radar into a helmet alongside OmniBuds and Polar H10 sensors for benchmarking.

The system investigates how sensor position, user posture, and chest motion visibility affect radar-based HR and RR estimation. To move beyond accuracy-only metrics, I introduced VITAL-KL, a new waveform-similarity metric that quantifies differences in respiratory and cardiac signal morphology between modalities. Results show that front-facing placements achieve high similarity and up to 97% HR accuracy, while rear placements degrade performance due to motion obstruction. These findings provide design insights for future wearable radar systems and highlight the feasibility of embedding mmWave sensing into natural form factors such as helmets, glasses, and AR headsets.

Wireless: Speech, Audio, and Radio

I work on physics-informed and data-driven wireless technologies augmented with neural networks to advance speech, audio, and radio sensing, and lead several research projects in this direction. LPCSE demonstrates how classical physical and rule-based models can be embedded within end-to-end machine learning frameworks to achieve efficient, interpretable, and physically grounded intelligence. Projects on auditory attention decoding and otoacoustic emission analysis build on physiological and auditory principles to interpret received signals and extract informative features, enabling data-driven learning and inference. The PAMT project studies multipath-induced phase distortion across acoustic frequencies and introduces a real-time metric and mitigation strategy, achieving millimetre-level acoustic ranging and motion tracking with commercial microphones and speakers. The Radio and Acoustic Backscatter project develops ultra-low-power wireless sensor nodes that harvest energy from ambient radio waves and embed acoustic information into backscattered RF signals.

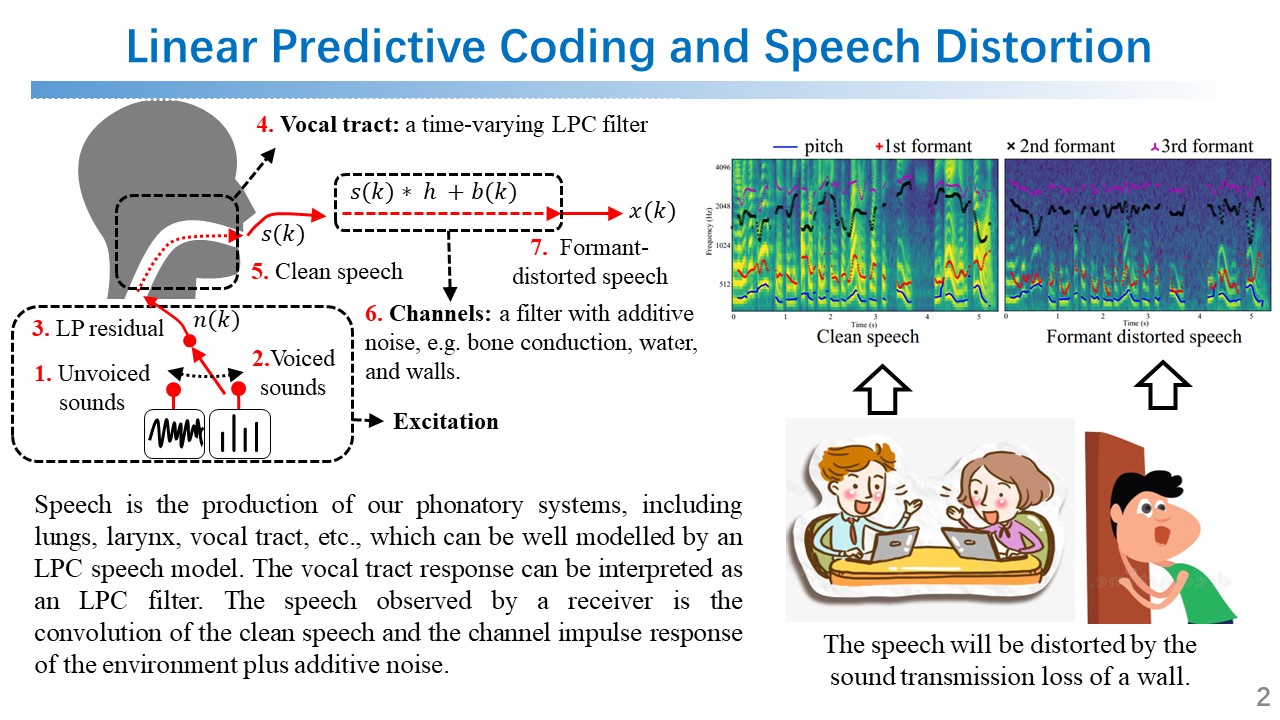

LPCSE: Neural Speech Enhancement through Linear Predictive Coding [paper]

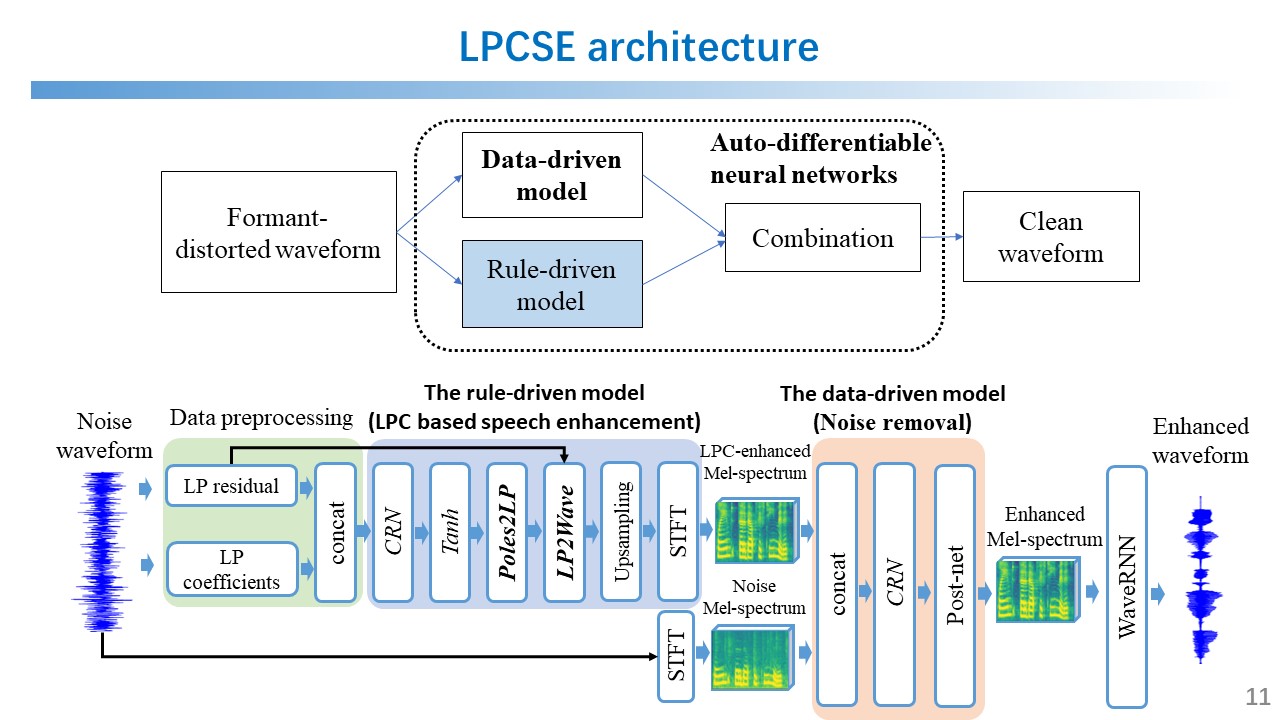

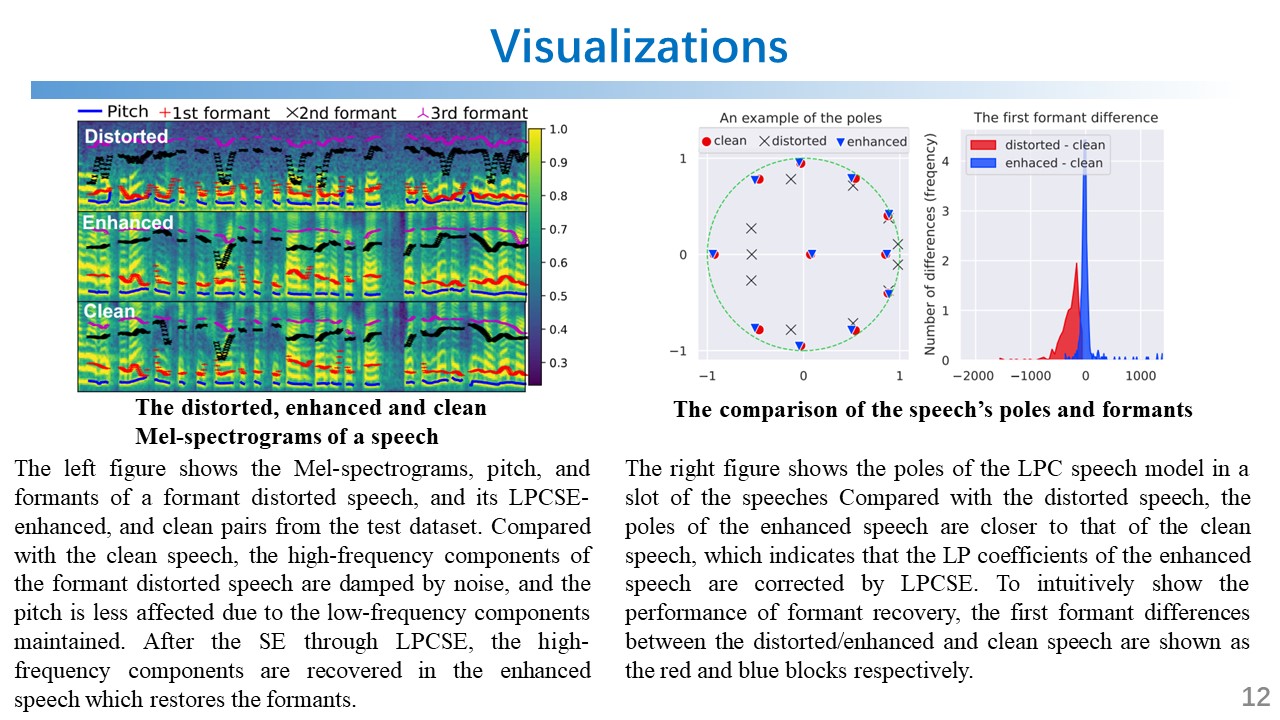

I proposed a new speech enhancement (SE) architecture called LPCSE that combines classic signal processing, namely Linear Predictive Coding (LPC), with neural networks in auto-differentiable machine learning frameworks, as shown in the figures below. The architecture leverages the strong inductive biases of classic speech models together with the expressive power of neural networks. For this work, I also studied speech synthesis and enhancement techniques, including neural vocoders (WaveNet, WaveRNN, MelGAN, HiFi-GAN, etc.), denoising (SEGAN, U-Net, etc.), voice conversion (AutoVC, StarGAN-VC, etc.), and expert-rule-inspired speech and audio synthesis (LPCNet, DDSP, etc.).
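For readers unfamiliar with LPC, the sketch below shows the classic analysis step on its own: estimating predictor coefficients from one frame with the autocorrelation method and the Levinson-Durbin recursion. It is a plain NumPy illustration of textbook LPC, not the LPCSE architecture, and it is not differentiable as written; the function name and the toy input are assumptions.

```python
import numpy as np


def lpc_coefficients(frame: np.ndarray, order: int) -> np.ndarray:
    """Estimate LPC coefficients a[0..order] with a[0] = 1.

    Uses the autocorrelation method and the Levinson-Durbin recursion.
    The prediction error is e[n] = x[n] + sum_k a[k] * x[n - k].
    """
    n = len(frame)
    # Autocorrelation at lags 0..order.
    r = np.array([np.dot(frame[: n - k], frame[k:]) for k in range(order + 1)])
    a = np.zeros(order + 1)
    a[0] = 1.0
    err = r[0]
    for i in range(1, order + 1):
        # Reflection coefficient for model order i.
        acc = r[i] + np.dot(a[1:i], r[i - 1 : 0 : -1])
        k = -acc / err
        # Update a[1..i-1] from the order i-1 solution, then append a[i].
        a_prev = a.copy()
        a[1:i] = a_prev[1:i] + k * a_prev[i - 1 : 0 : -1]
        a[i] = k
        err *= 1.0 - k * k
    return a


# Toy input: the impulse response of a one-pole filter with pole at 0.9.
# The estimated coefficients should be close to [1.0, -0.9, 0.0].
frame = 0.9 ** np.arange(200)
print(lpc_coefficients(frame, order=2))
```

In a neural pipeline, the same recursion can be expressed with differentiable tensor operations so that gradients flow through the LPC stage.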

A brief introduction to this project is shown in the video below.

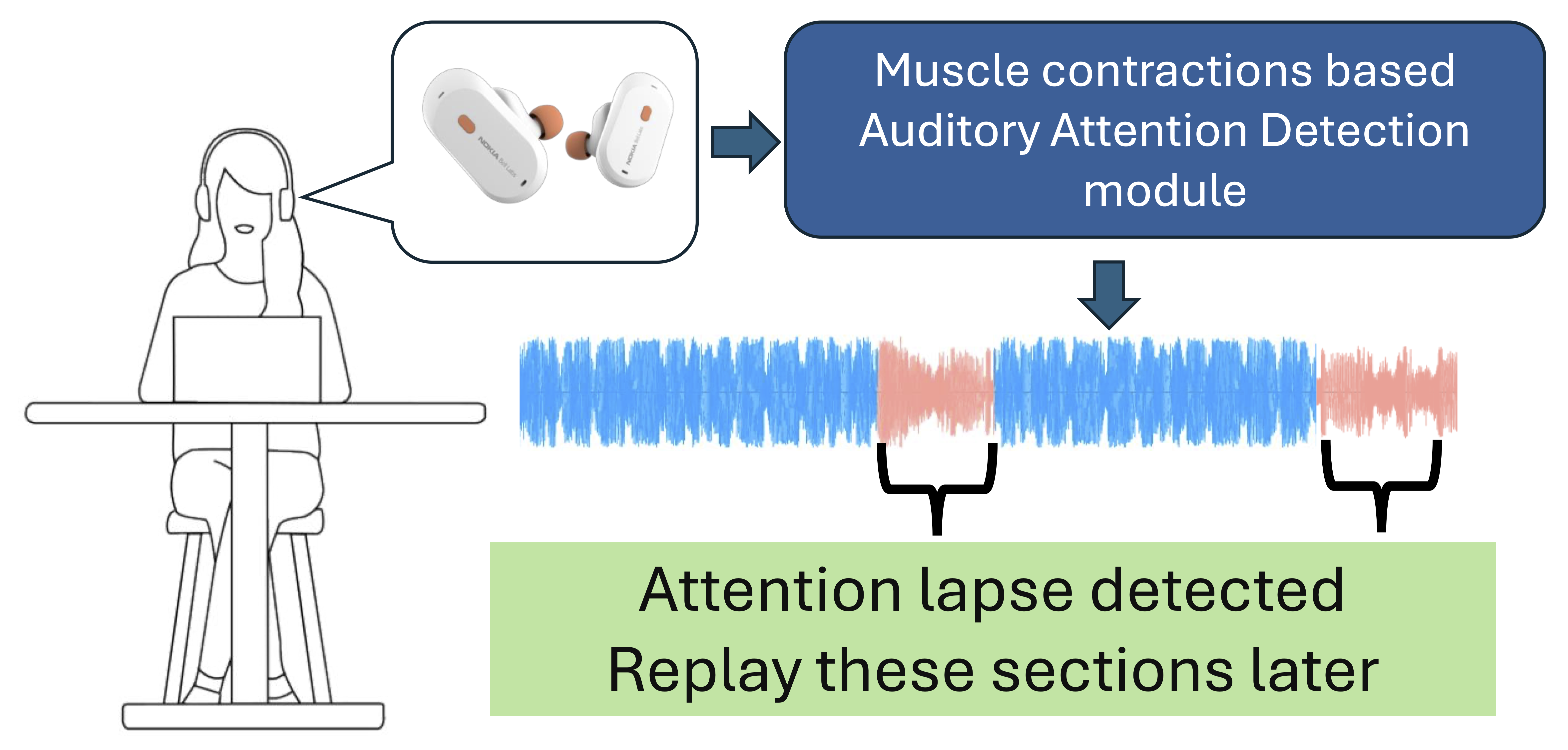

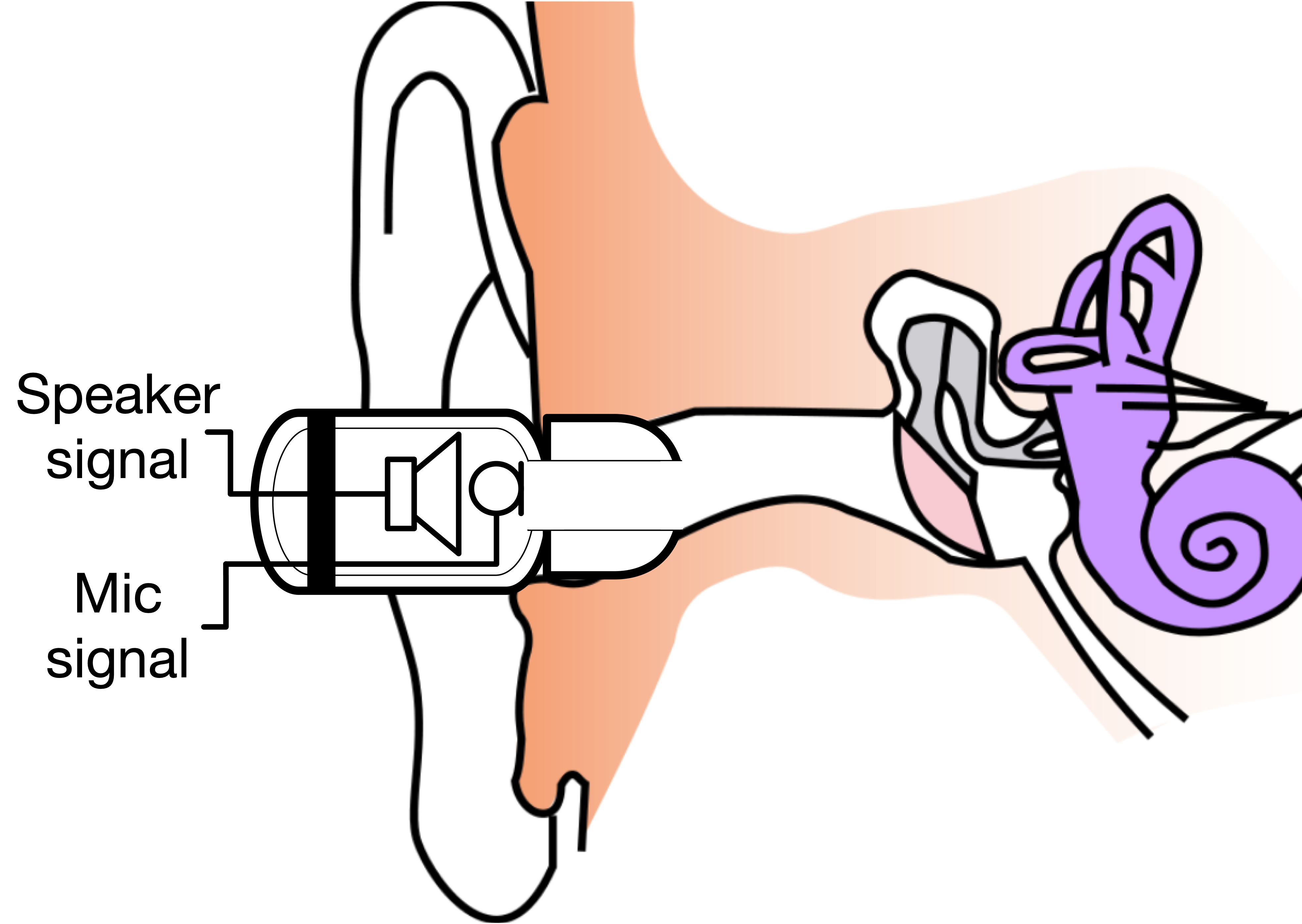

Auditory Attention from In-Ear Muscle Contractions Using Commodity Earbuds [paper]

This project explores the feasibility of detecting auditory attention shifts through subtle in-ear muscle contractions, using commodity earbuds. By leveraging ultrasound signals emitted and received by the built-in speaker–microphone pair, the system detects minute canal deformations correlated with attention changes. This enables continuous, unobtrusive monitoring of user attention without interrupting normal listening. A phase-based signal processing pipeline extracts micro-displacement features, while a heartbeat-aware classification stage distinguishes attentional states. Results from controlled user studies confirm that the system can reliably detect attention lapses, demonstrating the potential of cognitive-aware earables.

Social Media Mentions:

Earbuds and Echoes: A New Way to Track Auditory Attention

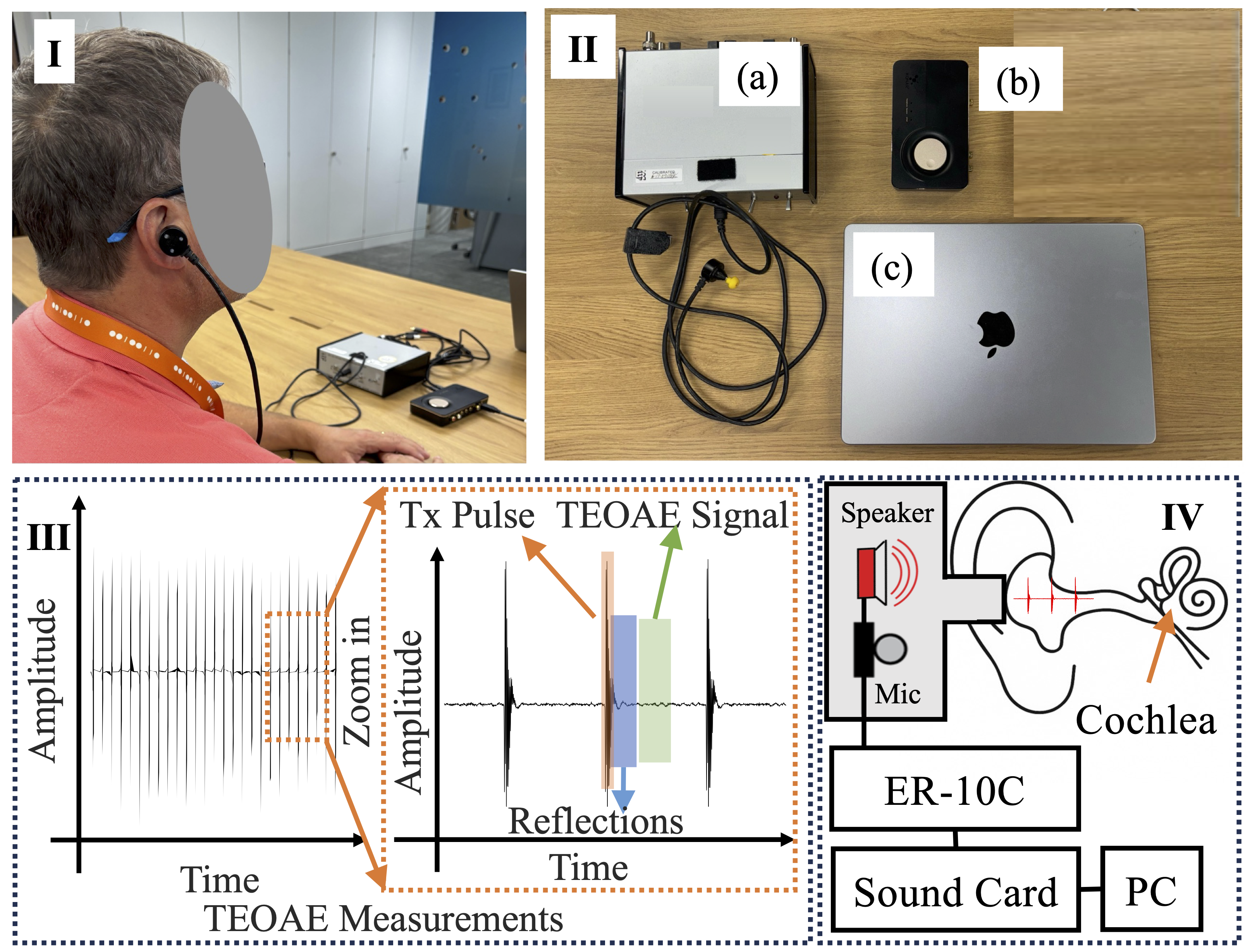

TEOAE Measurement Beyond Clinical Settings: Intermittent Acquisition and Computation in Everyday Audio [To appear]

This work investigates how Transient Evoked Otoacoustic Emissions (TEOAEs), a standard non-invasive hearing assessment technique, can be extended beyond traditional clinical setups into everyday listening environments.

We propose a framework that intermittently embeds TEOAE click stimuli within natural audio content such as podcasts, enabling passive hearing screening without requiring dedicated hardware or clinical supervision. A controlled pilot study was conducted to analyze listener perception and measurement fidelity across varying click densities. Results show that listeners prefer lower stimulus density because it is less perceptually intrusive, and that it still maintains sufficient TEOAE signal quality for reliable estimation. This approach offers a promising pathway toward media-integrated auditory screening systems and toward unobtrusive, accessible hearing health monitoring using commodity devices.

Experimental Setup for TEOAE Measurement

(I) Participant with ER-10C probe, (II) Hardware chain, (III) Time-domain TEOAE waveform, (IV) Measurement schematic.

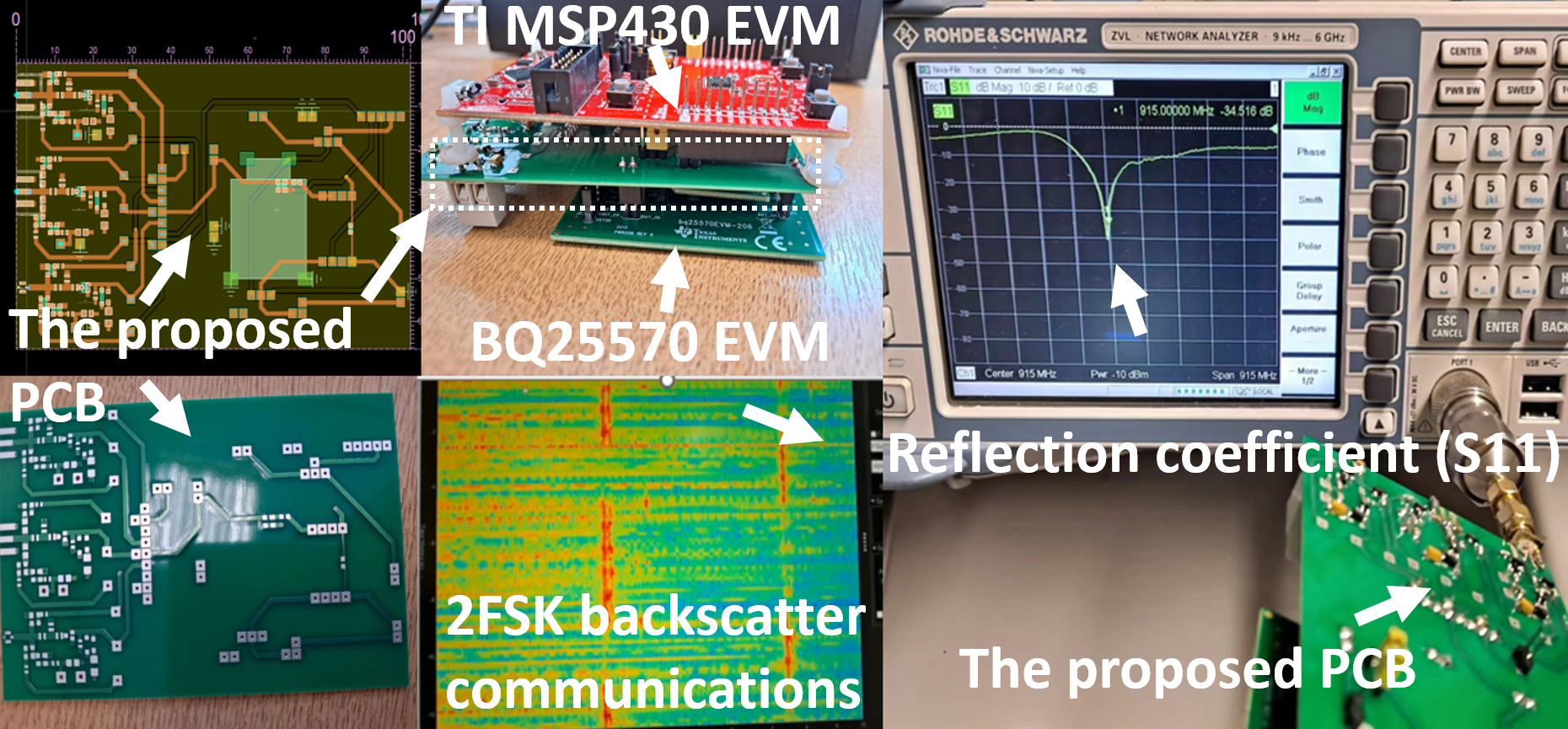

Battery-free Wireless Sensor Nodes for Radio and Acoustic Backscatter Communications [paper]

I led a project on battery-free wireless sensor nodes for backscatter communications. The proposed node harvests energy from RF signals at RFID frequency bands and then reflects those RF signals with the raw signals from analog sensors (e.g., nanogenerators, microphones, and piezo sensors) embedded in them. The node enables microwatt-level uplink and downlink communications with an RFID Reader at a distance of a couple of meters.

As an example application scenario, the battery-free nodes can be deployed in hazardous environments, e.g., caves, forests, and oceans. A low-altitude drone equipped with an RFID Reader collects the data by flying past the nodes one by one. The battery-free design improves the scalability and lifetime of wireless sensor networks by removing the cost of battery maintenance.

The figures below show the PCB and node design, S11, and the received spectra of the 2-FSK signals in backscatter communications. The reflection coefficient of the proposed circuit (S11) in the first figure is measured with a Rohde & Schwarz network analyzer and indicates that the circuit has good impedance matching at the target frequency (915 MHz). In this project, Keysight ADS is used for impedance matching, schematic and PCB design, and Large-Signal S-Parameter (LSSP) and harmonic balance simulation. An Ettus USRP is used to evaluate the system’s performance. An ultra-low-power chip (TI MSP430) supports power management in energy harvesting and wireless modulation and demodulation in backscatter communications.
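As a reminder of how 2-FSK signalling works, the following sketch modulates a bit sequence onto two tones and recovers it with non-coherent energy detection. It models only the baseband tones, not the RF backscatter channel, the USRP receive chain, or the MSP430 firmware; the tone frequencies, bit rate, sample rate, and noise level are arbitrary assumptions chosen for illustration.

```python
import numpy as np


def fsk2_modulate(bits, f0_hz, f1_hz, bit_rate, sample_rate):
    """Return a real 2-FSK waveform: tone f0 for bit 0, tone f1 for bit 1."""
    samples_per_bit = int(sample_rate // bit_rate)
    t = np.arange(samples_per_bit) / sample_rate
    tones = {0: np.cos(2 * np.pi * f0_hz * t), 1: np.cos(2 * np.pi * f1_hz * t)}
    return np.concatenate([tones[int(b)] for b in bits])


def fsk2_demodulate(signal, f0_hz, f1_hz, bit_rate, sample_rate):
    """Non-coherent detection: pick the tone with more energy in each bit."""
    samples_per_bit = int(sample_rate // bit_rate)
    t = np.arange(samples_per_bit) / sample_rate
    ref0 = np.exp(-2j * np.pi * f0_hz * t)
    ref1 = np.exp(-2j * np.pi * f1_hz * t)
    num_bits = len(signal) // samples_per_bit
    frames = np.reshape(signal[: num_bits * samples_per_bit],
                        (num_bits, samples_per_bit))
    energy0 = np.abs(frames @ ref0)  # correlation magnitude with tone f0
    energy1 = np.abs(frames @ ref1)  # correlation magnitude with tone f1
    return (energy1 > energy0).astype(int).tolist()


# Assumed parameters: 1 kHz / 2 kHz tones, 100 bit/s, 48 kHz sampling.
bits = [1, 0, 1, 1, 0, 0, 1, 0]
params = dict(f0_hz=1000.0, f1_hz=2000.0, bit_rate=100, sample_rate=48000)
tx = fsk2_modulate(bits, **params)
rng = np.random.default_rng(0)
rx = tx + 0.5 * rng.standard_normal(len(tx))  # additive noise only
print(fsk2_demodulate(rx, **params) == bits)
```

Non-coherent detection is used here because it needs no carrier phase recovery, which keeps the receiver sketch short.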

Figure captions: Battery-free Wireless Sensor Node Design and Test; PCB Design and Assembly.

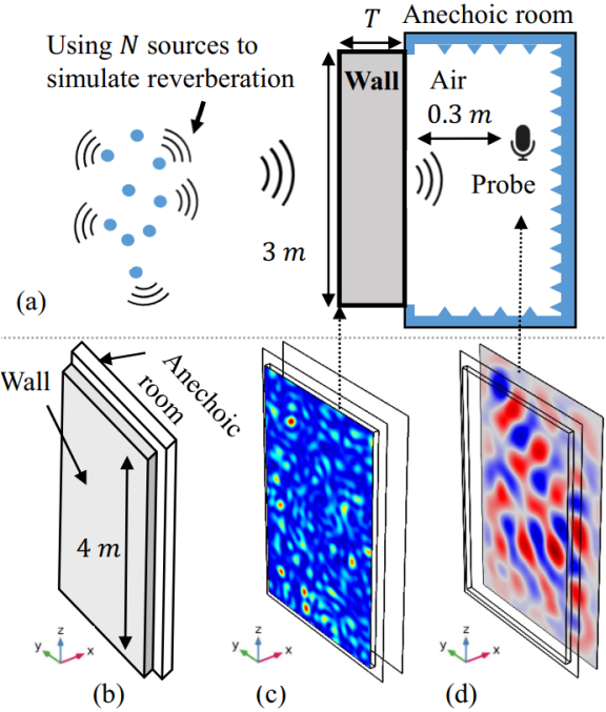

PAMT: Phase-based Acoustic Ranging and Motion Tracking in Multipath Fading Environments (IEEE INFOCOM) [paper]

Accurate and low-cost motion tracking is essential for mobile interaction, VR/AR applications, and human–computer interfaces. Traditional systems often rely on specialized hardware such as cameras or IMUs, which are sensitive to occlusion or require line-of-sight. To overcome these limitations, I explored acoustic phase sensing as a new modality for precise ranging and motion tracking using only built-in microphones and speakers on commercial mobile devices.

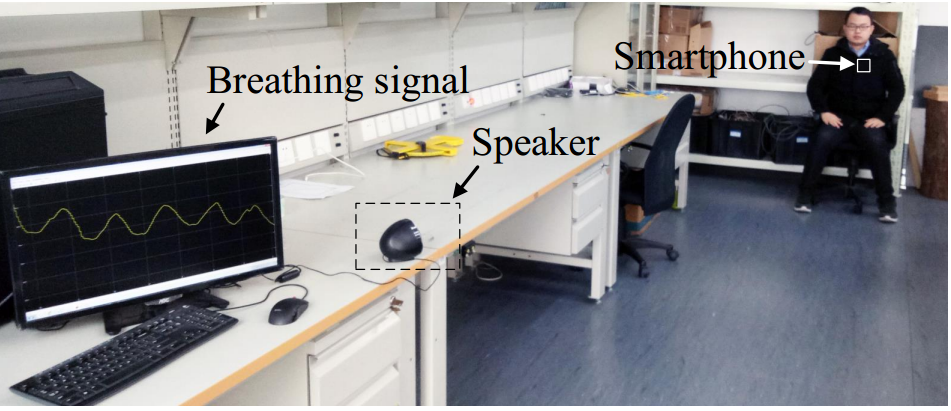

I proposed and implemented a fine-grained ranging system on commercial mobile devices (e.g., smartphones). The key idea is to use the acoustic phase for accurate distance measurement. A prototype ranging system implemented on a standard Android smartphone can monitor human breathing, as shown in the figures below.
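The relationship between acoustic phase and distance can be sketched as follows. The code assumes a single continuous tone whose received phase is φ = −2πfd/c, so a path-length change Δd produces a phase change of −2πΔd/λ. The speed of sound, the sign convention, and the `round_trip` option are assumptions for illustration and may differ from the PAMT implementation.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C


def phase_to_displacement(phase_rad, freq_hz, round_trip=False):
    """Convert a received-tone phase track into displacement in metres.

    Assumes phi = -2*pi*f*d/c, so a longer path lowers the phase.
    With round_trip=True the signal is assumed to be reflected, so the
    reflector moves half of the measured path-length change.
    """
    wavelength = SPEED_OF_SOUND_M_S / freq_hz
    # Remove 2*pi jumps so the phase track is continuous.
    unwrapped = np.unwrap(np.asarray(phase_rad, dtype=float))
    delta_phase = unwrapped - unwrapped[0]
    path_change = -delta_phase * wavelength / (2.0 * np.pi)
    return path_change / 2.0 if round_trip else path_change


# Simulate a 30 mm movement observed with an 18 kHz tone (wavelength
# about 19 mm), so the wrapped phase crosses the +/- pi boundary.
freq_hz = 18000.0
true_distance = np.linspace(0.0, 0.03, 200)
wrapped_phase = np.angle(
    np.exp(-2j * np.pi * freq_hz * true_distance / SPEED_OF_SOUND_M_S)
)
recovered = phase_to_displacement(wrapped_phase, freq_hz)
print(np.max(np.abs(recovered - true_distance)))
```

Because one wavelength at 18 kHz is under 2 cm, a small phase resolution translates into sub-millimetre distance resolution, which is why phase is attractive for fine-grained ranging.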

Based on the ranging method above, I proposed and implemented a fine-grained and low-cost acoustic motion-tracking method called PAMT for mobile interaction. The proposed method allows mobile users to interact with a computer by using a gesture interface in practical indoor environments. The system could provide economical and flexible navigation and gesture recognition for VR/AR users.

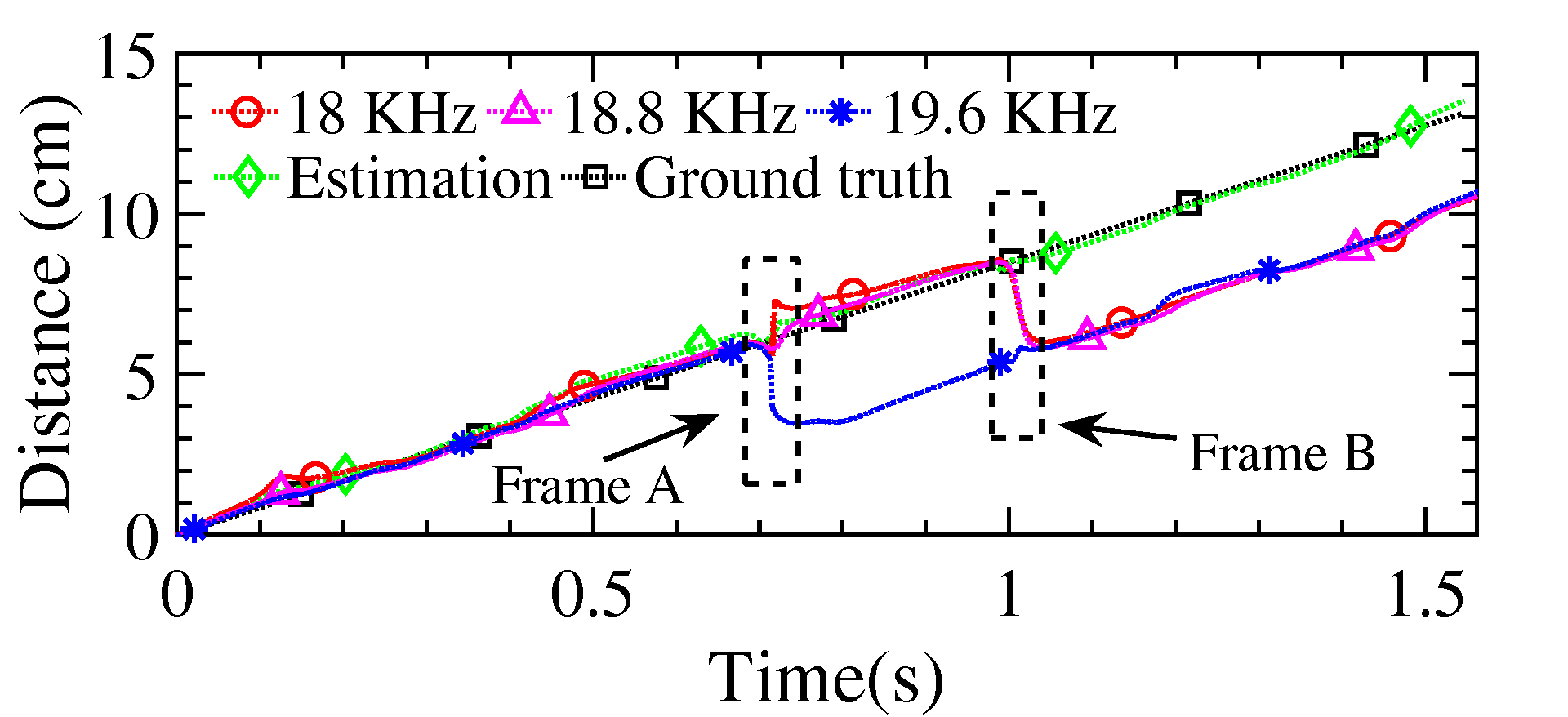

In practical indoor environments, it is challenging to obtain an accurate moving distance from the phase change because acoustic signals are attenuated and reflected. To address this challenge, I proposed a metric to identify the impact of multipath fading in real time. Based on this metric, I proposed a multipath interference mitigation method that exploits the diversity of acoustic frequencies: the actual moving distance is calculated by combining the moving distances measured at different frequencies, as shown in the figures below. Experimental results show that the measurement errors are less than 2 mm and 4 mm in one-dimensional and two-dimensional scenarios, respectively.
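A minimal sketch of frequency-diversity combining is given below. It assumes each tone yields its own displacement estimate plus a per-tone fading score, discards tones flagged as fading-affected, and takes the median of the rest. The score, the threshold, and the median rule are stand-ins chosen for illustration; the actual PAMT metric and combining rule are described in the paper.

```python
import numpy as np


def combine_across_frequencies(displacements_m, fading_scores, max_score=0.5):
    """Combine per-frequency displacement estimates into one value.

    displacements_m: displacement measured at each tone, in metres.
    fading_scores:   hypothetical multipath indicator per tone, where a
                     larger value means more fading-affected.
    Tones scoring above `max_score` are discarded and the median of the
    rest is returned. If no tone passes, the median of all tones is used.
    """
    d = np.asarray(displacements_m, dtype=float)
    s = np.asarray(fading_scores, dtype=float)
    if d.shape != s.shape:
        raise ValueError("displacements_m and fading_scores must match in shape")
    keep = s <= max_score
    if not np.any(keep):
        keep = np.ones(d.shape, dtype=bool)
    return float(np.median(d[keep]))


# Four tones; the third is distorted by multipath and is excluded.
estimate = combine_across_frequencies(
    displacements_m=[0.0100, 0.0102, 0.0250, 0.0099],
    fading_scores=[0.1, 0.2, 0.9, 0.1],
)
print(estimate)
```

The median is used because a single badly faded tone that slips past the threshold still cannot dominate the combined estimate.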

Real-time Multipath Fading Feature: visualization of a newly proposed feature that reflects dynamic multipath fading conditions.

Distance Measurement Across Frequencies: demonstration of motion tracking based on frequency-dependent phase variations.

A demonstration is shown in the video below.

A brief introduction to this project is shown in the video below.

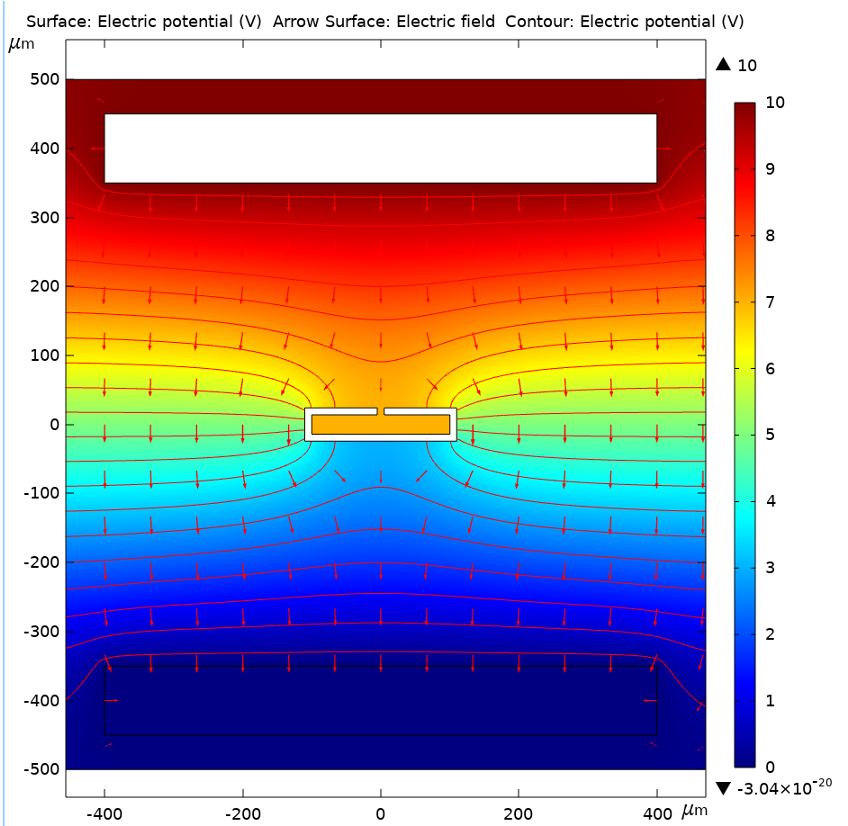

Multiphysics Simulation

To strengthen data-driven models with physical realism, I employ finite element simulations using tools such as COMSOL Multiphysics and ANSYS Fluent to study piezoelectric stress, acoustic propagation, and fluid–structure coupling. Through these simulations, I investigate how energy propagates, transforms, and couples across diverse materials, geometries, boundary conditions, and environments.

These studies form the physics-informed foundation of my vision for machine learning research: bridging the gap between physical constraints and real-world applications, a challenge that has become increasingly relevant in the era of large generative models. By embedding physical principles into learning architectures, physics-informed models can guide AI systems toward a deeper understanding of the physical world and more reliable generalization across domains.

The following examples illustrate selected multiphysics simulations from my previous work.

Publications

Yang Liu, Fahim Kawsar, and Alessandro Montanari, “Demo: A Real-Time Multimodal Sensing and Feedback System for Closed-Loop Wearable Interaction Using OmniBuds,” Proceedings of ACM MobiCom’25 - the 31st Annual International Conference on Mobile Computing and Networking, 2025. [paper]

Yang Liu and Alessandro Montanari, “EarFusion: Quality-Aware Fusion of In-Ear Audio and Photoplethysmography for Heart Rate Monitoring,” Proceedings of ACM IASA’25 - the 3rd ACM International Workshop on Intelligent Acoustic Systems and Applications (co-located with ACM MobiCom’25), 2025. [paper]

Yang Liu, SiYoung Jang, Alessandro Montanari, and Fahim Kawsar, “SPATIUM: A Context-Aware Machine Learning Framework for Immersive Spatiotemporal Health Understanding,” Proceedings of ACM NetAISys’25 - the 3rd International Workshop on Networked AI Systems (co-located with ACM MobiSys’25), 2025. [paper]

Liu Yang, Xiaofei Li, Xinheng Wang, Yang Liu, Naizheng Jia, Xinwei Gao, Shuyu Li, and Zhi Wang, “SwimSafe: A Smartphone-based Acoustic System for Water-Depth Detection and Hazard Alert,” IEEE Sensors Journal, vol. 25, no. 15, pp. 28919-28933, 2025. [paper]

Yang Liu, Fahim Kawsar, and Alessandro Montanari, “mmHvital: A Study on Head-Mounted mmWave Radar for Vital Sign Monitoring,” Proceedings of ACM HumanSys’25 - the 3rd International Workshop on Human-Centered Sensing, Modeling, and Intelligent Systems (co-located with ACM SenSys’25), 2025. [paper]

Yang Liu, Alessandro Montanari, Ashok Thangarajan, Khaldoon Al-Naimi, Andrea Ferlini, Ananta Narayanan Balaji, and Fahim Kawsar, “Demo: Multimodal Bio-Sensing and On-Device Machine Learning: Advancing Health Perception with OmniBuds,” Proceedings of ACM SenSys’25 - the 23rd ACM Conference on Embedded Networked Sensor Systems, 2025. [paper]

Harshvardhan Takawale, Yang Liu, Khaldoon Al-Naimi, Fahim Kawsar, and Alessandro Montanari, “Towards Detecting Auditory Attention from in-Ear Muscle Contractions using Commodity Earbuds,” Proceedings of ICASSP 2025 - IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 1-5, IEEE, 2025. [paper]

Jiatao Quan, Khaldoon Al-Naimi, Xijia Wei, Yang Liu, Fahim Kawsar, Alessandro Montanari, and Ting Dang, “Cognitive Load Monitoring via Earable Acoustic Sensing,” Proceedings of ICASSP 2025 - IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 1-5, IEEE, 2025. [paper]

Irtaza Shahid, Khaldoon Al-Naimi, Ting Dang, Yang Liu, Fahim Kawsar, and Alessandro Montanari, “Towards Enabling DPOAE Estimation on Single-Speaker Earbuds,” Proceedings of ICASSP 2024 - IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 246-250, IEEE, 2024. [paper]

Alessandro Montanari, Ashok Thangarajan, Khaldoon Al-Naimi, Andrea Ferlini, Yang Liu, Ananta Narayanan Balaji, and Fahim Kawsar, “OmniBuds: A Sensory Earable Platform for Advanced Bio-Sensing and On-Device Machine Learning,” arXiv preprint arXiv:2410.04775, 2024. [paper]

Na Tang, Yang Liu, Xiaoli Chu, and Ian F. Akyildiz, “Design, Modeling and Analysis of Underwater Acoustic Backscatter Communications,” Proceedings of IEEE ICC 2024 - IEEE International Conference on Communications, Denver, Colorado, USA, June 2024. [paper]

Zhiyu Liu, Yang Liu, and Xiaoli Chu, “Reconfigurable-Intelligent-Surface-Assisted Indoor Millimeter-Wave Communications for Mobile Robots,” IEEE Internet of Things Journal, vol. 11, no. 1, pp. 1548-1557, Jan. 1, 2024. [paper]

Yang Liu, Na Tang, Xiaoli Chu, Yang Yang, and Jun Wang, “LPCSE: Neural Speech Enhancement through Linear Predictive Coding,” Proceedings of GLOBECOM 2022 - 2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, pp. 5335-5341, 2022. [paper]

Yang Liu, Wuxiong Zhang, Yang Yang, Weidong Fang, Fei Qin, and Xuewu Dai, “RAMTEL: Robust Acoustic Motion Tracking using Extreme Learning Machine for Smart Cities,” IEEE Internet of Things Journal, 2019. [paper]

Yang Liu, Wuxiong Zhang, Yang Yang, Weidong Fang, Fei Qin, and Xuewu Dai, “PAMT: Phase-based Acoustic Motion Tracking in Multipath Fading Environments,” Proceedings of IEEE Conference on Computer Communications 2019 (INFOCOM’19). (Rank A, acceptance rate: 19.7%). [paper]

Yang Liu, Yang Yang, Weidong Fang, and Wuxiong Zhang, “Demo: Phase-based Acoustic Localization and Motion Tracking for Mobile Interaction,” Proceedings of ACM Multimedia conference (ACM MM’18). [paper]

Yang Liu, Yubing Wang, Weiwei Gao, Wuxiong Zhang, and Yang Yang, “A Novel Low-Cost Real-Time Power Measurement Platform for LoWPAN IoT Devices,” Mobile Information Systems, 2017. [paper]

Weidong Fang, Wuxiong Zhang, Wei Chen, Yang Liu, and Chaogang Tang, “TMSRS: Trust Management-based Secure Routing Scheme in Industrial Wireless Sensor Network with Fog Computing,” Wireless Networks, 2019. [paper]

Weidong Fang, Wuxiong Zhang, Yang Yang, Yang Liu, and Wei Chen, “A resilient trust management scheme for defending against reputation time-varying attacks based on BETA distribution,” Science China Information Sciences, 2017. [paper]